Fast and flexible: Human program induction in abstract reasoning tasks

Aysja Johnson, Wai Keen Vong, Brenden M. Lake and Todd M. Gureckis

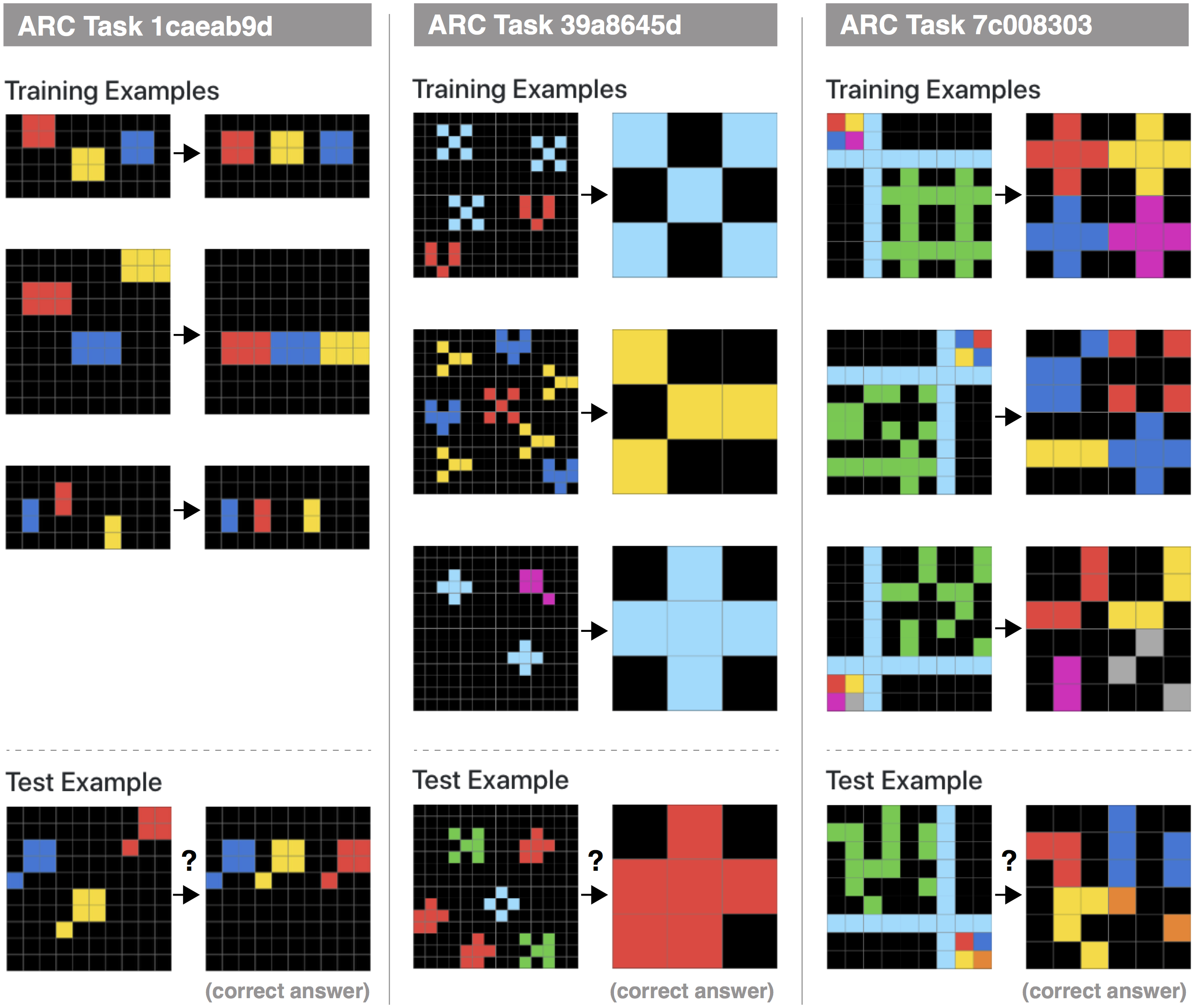

Figure 1: Three example ARC tasks. Each column contains a set of “training examples” and a “test example” for an ARC problem. The training examples contain a number of input patterns that point (→) to the corresponding output. Here there are always three input→output pairs, but there can be fewer or more. The rules for transforming the input examples to the output examples are unique for each ARC task. The left task (which we refer to later as the box alignment task) requires aligning the red and yellow objects to the blue object along the vertical axis. The middle task requires counting each object and returning the most common object. The right task requires mapping the four colors in the 2x2 quadrant onto each quadrant of the green object. Understanding of the pattern is assessed with the test example. Here a single test input is provided and the agent has to create the expected output. The correct answer is displayed here for each problem, but the agent does not have access to it.
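The structure described in the caption (a few input→output training pairs plus a held-out test input) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: `toy_task` and its grids are invented, `flip_horizontal` is a toy rule that is not one of the tasks in Figure 1, and the `"train"`/`"test"` key names are assumed to mirror the layout of the public ARC task files rather than taken from this page.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]          # each cell holds a color index
Pair = Dict[str, Grid]          # {"input": grid, "output": grid}
Program = Callable[[Grid], Grid]


def flip_horizontal(grid: Grid) -> Grid:
    """Toy candidate rule (hypothetical): mirror every row left-to-right."""
    return [list(reversed(row)) for row in grid]


# Hypothetical task: invented grids, assumed key names.
toy_task: Dict[str, List[Pair]] = {
    "train": [
        {"input": [[1, 0], [2, 0]], "output": [[0, 1], [0, 2]]},
        {"input": [[0, 3, 3]], "output": [[3, 3, 0]]},
    ],
    "test": [
        {"input": [[4, 0, 0], [0, 5, 0]], "output": [[0, 0, 4], [0, 5, 0]]},
    ],
}


def consistent_with_training(program: Program, task: Dict[str, List[Pair]]) -> bool:
    """True if the candidate rule reproduces every training output exactly."""
    return all(program(p["input"]) == p["output"] for p in task["train"])


def solves_test(program: Program, task: Dict[str, List[Pair]]) -> bool:
    """True if the candidate rule produces the exact expected test output."""
    return all(program(p["input"]) == p["output"] for p in task["test"])
```

In this sketch a rule is first checked against the training pairs and only then applied to the test input, mirroring the order in which the agent sees the information: the expected test output is used for scoring, not for inference.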

Abstract

The Abstraction and Reasoning Corpus (ARC) is a challenging program induction dataset that was recently proposed by Chollet (2019). Here, we report the first set of results collected from a behavioral study of humans solving a subset of tasks from ARC (40 out of 1000). Although this subset of tasks contains considerable variation, our results show that humans were able to infer the underlying program and generate the correct test output for a novel test input example, with an average of 80% of tasks solved per participant, and with 65% of tasks being solved by more than 80% of participants. Additionally, we find interesting patterns of behavioral consistency and variability in the action sequences during the generation process, in the natural language descriptions of the transformations for each task, and in the errors people made. Our findings suggest that people can quickly and reliably determine the relevant features and properties of a task to compose a correct solution. Future modeling work could incorporate these findings, potentially by connecting the natural language descriptions we collected here to the underlying semantics of ARC.
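The abstract reports two different aggregates over the same data: a per-participant average and a per-task threshold count. The sketch below shows how such figures could be computed from a participant-by-task table of outcomes. It is an illustration under stated assumptions, not the authors' analysis code: the function names and the toy `solved` table are hypothetical, and it assumes every participant attempted every task, which may not match the actual study design.

```python
from typing import List


def mean_fraction_solved_per_participant(solved: List[List[bool]]) -> float:
    """Average, over participants (rows), of the fraction of tasks each solved."""
    per_participant = [sum(row) / len(row) for row in solved]
    return sum(per_participant) / len(per_participant)


def fraction_of_tasks_above_threshold(
    solved: List[List[bool]], threshold: float = 0.8
) -> float:
    """Fraction of tasks (columns) solved by strictly more than `threshold` of participants."""
    n_participants = len(solved)
    n_tasks = len(solved[0])
    above = 0
    for t in range(n_tasks):
        solve_rate = sum(row[t] for row in solved) / n_participants
        if solve_rate > threshold:
            above += 1
    return above / n_tasks


# Invented toy data: 5 participants (rows) by 4 tasks (columns).
solved = [
    [True, True, True, False],
    [True, True, True, True],
    [True, True, False, False],
    [True, True, True, False],
    [True, False, True, True],
]

print(mean_fraction_solved_per_participant(solved))   # 0.75
print(fraction_of_tasks_above_threshold(solved))      # 0.25
```

The two numbers can diverge considerably, as in the toy data: participants solve 75% of tasks on average, yet only one task in four is solved by more than 80% of participants, because a task solved by exactly 80% does not clear a strict threshold.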

Publication

- Fast and flexible: Human program induction in abstract reasoning tasks

Data Visualizations

Click on the links below to explore participant responses to individual tasks:

- 025d127b.json

- 150deff5.json

- 1caeab9d.json

- 1e0a9b12.json

- 1f876c06.json

- 1fad071e.json

- 2281f1f4.json

- 228f6490.json

- 31aa019c.json

- 39a8645d.json

- 3aa6fb7a.json

- 3af2c5a8.json

- 444801d8.json

- 47c1f68c.json

- 5c0a986e.json

- 6150a2bd.json

- 62c24649.json

- 6773b310.json

- 6c434453.json

- 6e82a1ae.json